Updated on March 27th, 2023

Even though graphics processors were initially intended for gaming, computer science enthusiasts are well aware that they hold significant value in numerous other areas. With supply chain problems gradually being resolved and prices becoming more stable, many individuals are keen to get their hands on the latest NVIDIA GPUs. This blog post is here to help you make an informed decision when selecting the ideal GPU for your deep learning projects!

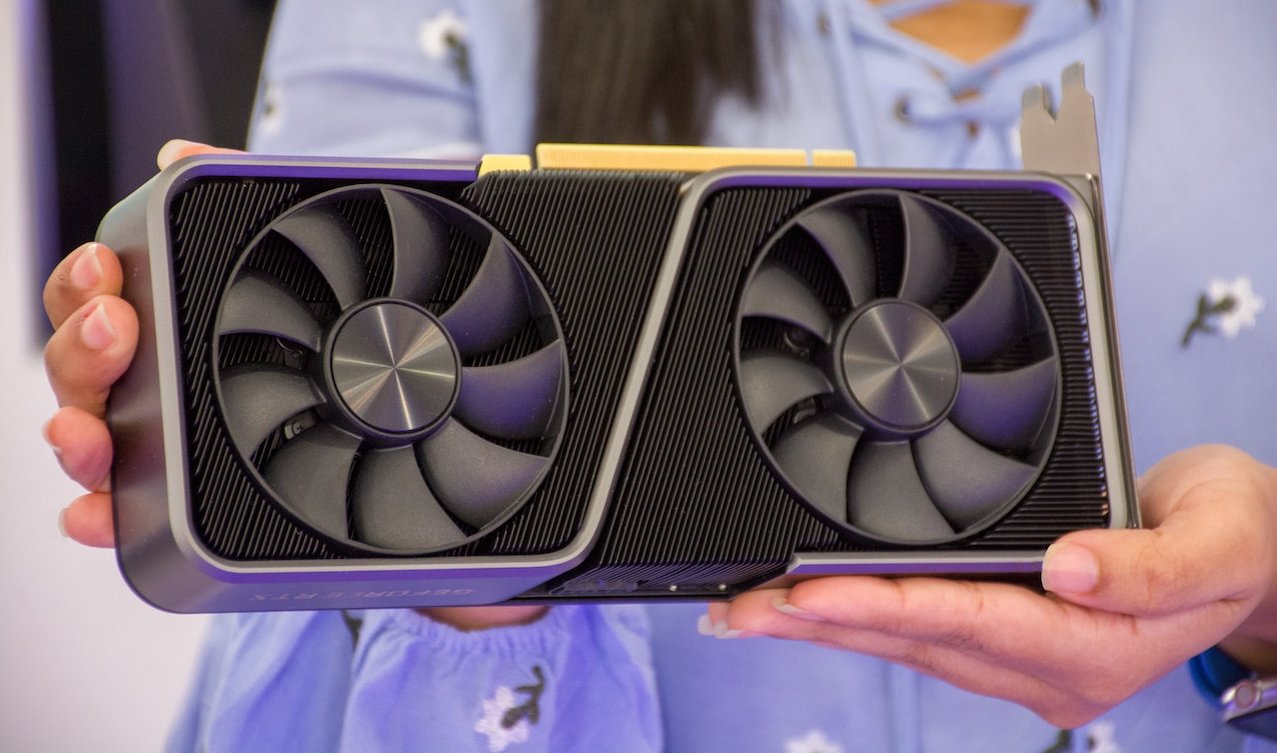

NVIDIA recognizes that gamers are no longer the only audience for its products. Ever since the Volta generation, its GPUs have come equipped with Tensor Cores, which are specifically designed to accelerate AI workloads. Apart from Tensor Cores, NVIDIA is continually updating both its hardware platform and its software to provide new features and performance improvements for deep learning. Most of these features are also present in the consumer line-up of GPUs.

Product Tiers

NVIDIA's products fall into roughly three categories: consumer-grade, workstation-grade and server-grade. In most cases, the actual chips used in these cards are the same but artificially limited to achieve product diversity across NVIDIA's product line-up.

Server-grade cards are meant for use in data centers and high-performance computing clusters. First, they are usually optimized to be small, so that many of them fit in a few servers. Second, their power draw is as low as possible, since power usage directly affects the bottom line when deploying at scale.

Workstation-grade cards are mostly meant for professional use. NVIDIA targets 3D modelers, game developers, AI developers, and anyone else who needs a GPU to do their job. Workstation-grade GPUs are smaller (and less cool-looking) than their consumer-grade equivalents, and their power draw is lower.

Both server and workstation GPUs often come with more VRAM than their consumer-grade counterparts, matching what professionals often require. It is also essential to understand that NVIDIA's workstation and server products are licensed for use in "data center" deployments. NVIDIA specifically forbids the use of consumer-grade cards for this use case.

Finally, the most significant difference between these three categories is price. To illustrate, let's take a look at three GPUs with very similar performance characteristics: the consumer RTX 3080, the workstation RTX A5000 and the server NVIDIA A10 PCIe. All three cards can perform a similar number of floating point operations per second. Regarding VRAM, the RTX 3080 has 10GB, and the other two have 24GB. The TDP of the 3080 is 320 watts, compared to 230 watts for the A5000 and just 150 watts for the A10. In terms of pricing, the RTX 3080 goes for around €1000 in Europe, compared to €2500 for the RTX A5000 and €6000 for the A10.
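To make the gap concrete, here is a small sketch that works out the price ratio and the price per GB of VRAM from the figures quoted above. The dictionary layout and the function names (`price_ratios`, `euro_per_gb`) are our own choices for illustration, and the prices are the approximate ones mentioned in this post, so treat the output as a rough indication only.

```python
# Figures quoted in the paragraph above (prices are approximate, in euros).
cards = {
    "RTX 3080": {"tier": "consumer", "vram_gb": 10, "tdp_w": 320, "price_eur": 1000},
    "RTX A5000": {"tier": "workstation", "vram_gb": 24, "tdp_w": 230, "price_eur": 2500},
    "NVIDIA A10": {"tier": "server", "vram_gb": 24, "tdp_w": 150, "price_eur": 6000},
}


def price_ratios(cards, baseline="RTX 3080"):
    """Price of each card relative to the baseline card."""
    base = cards[baseline]["price_eur"]
    return {name: spec["price_eur"] / base for name, spec in cards.items()}


def euro_per_gb(cards):
    """Price per GB of VRAM for each card."""
    return {name: spec["price_eur"] / spec["vram_gb"] for name, spec in cards.items()}


ratios = price_ratios(cards)
per_gb = euro_per_gb(cards)

for name, spec in cards.items():
    print(
        f"{name} ({spec['tier']}): {ratios[name]:.1f}x the RTX 3080 price, "
        f"{per_gb[name]:.0f} EUR per GB of VRAM, {spec['tdp_w']} W TDP"
    )
```

With these numbers, the A10 costs six times as much as the RTX 3080 for roughly the same compute, while drawing less than half the power.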

What to buy then?

Unless you intend to use your GPU in a data center, consumer cards usually provide the best value. Note that the pricing does not increase linearly with performance. The flagship GPU of a specific generation is not necessarily the best value. If you are on a budget, you can often get a last-gen card second-hand for a much lower price.

To calculate "bang for buck", we estimated the actual market price of each card by looking at the sales prices of major retailers in Europe. The market value of a GPU changes all the time, so some of these prices might be out of date. For cards that are no longer sold by vendors, we listed the approximate second-hand price. Note that in previous versions of this blog post, we did not include the Tensor Core metric in the performance score. Tensor Core compatibility has improved drastically since the last time we updated this post, and we now believe that Tensor Cores will greatly impact actual performance for many users, so the metric is now included in the final calculation.
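If you want to run a similar comparison with up-to-date prices, a "bang for buck" ranking could be sketched as follows. This is not the exact formula behind our numbers: the equal weighting between regular and Tensor Core throughput, the function names, and the two placeholder cards with made-up figures are all assumptions for illustration. Replace them with real specifications and current prices.

```python
def performance_score(fp32_tflops, tensor_tflops, tensor_weight=0.5):
    """Blend regular and Tensor Core throughput into one score.

    The 50/50 weighting is an assumption, not the weighting used in this post.
    """
    return (1 - tensor_weight) * fp32_tflops + tensor_weight * tensor_tflops


def bang_for_buck(cards, tensor_weight=0.5):
    """Return (name, score per euro) pairs, best value first."""
    ranked = [
        (
            name,
            performance_score(c["fp32_tflops"], c["tensor_tflops"], tensor_weight)
            / c["price_eur"],
        )
        for name, c in cards.items()
    ]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# Placeholder cards with made-up numbers, purely to show the mechanics.
example_cards = {
    "card_a": {"fp32_tflops": 10.0, "tensor_tflops": 40.0, "price_eur": 500.0},
    "card_b": {"fp32_tflops": 20.0, "tensor_tflops": 80.0, "price_eur": 1500.0},
}

ranking = bang_for_buck(example_cards)
for name, value in ranking:
    print(f"{name}: {value:.4f} score per euro")
```

In this made-up example, `card_b` is twice as fast but three times as expensive, so `card_a` ranks first. Setting `tensor_weight=0` reproduces a ranking that ignores Tensor Cores entirely.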

Note that in the table above, the purple-marked area indicates second-hand prices for those cards. If you don't mind buying second-hand, previous-generation GPUs still provide the best value in terms of performance per euro spent. Interestingly, when looking for new cards only, the RTX 4090 provides the best value, even compared to Ampere cards. This is due to the large gap in terms of raw TFLOPS between the RTX 4080 and RTX 4090. In previous generations, the flagship card was usually not such a good deal. If you don't have that much money to spend, the RTX 4070 Ti is also a good deal, especially with the amount of VRAM it has.

TLDR

These are the updated recommendations (as of March 2023):

- If you have money to spend, we recommend getting the RTX 4090.

- If your budget is smaller than that, we recommend getting the RTX 4070 Ti.

- If you're okay with buying second-hand, get one or more RTX 2080 Ti cards at a good price to get the most performance per euro spent.