Oddity’s aim is to shape the future of safety. Apart from our usual projects in the domain of public safety, we are also working on different ideas. Wikke, one of our best deep learning engineers, is working together with the University of Utrecht to build an algorithm that can detect seizures in newborns.

Seizures are sudden electrical disturbances in the brain. They often cause uncontrolled changes in behaviour, movement and feelings. When someone experiences unprovoked seizures on a regular basis, they are diagnosed with epilepsy. Epilepsy is quite rare in the adult population, but much more prevalent among young children. Especially in the first months after birth, seizures are relatively common. Seizures in infants can have long-term health effects: they can hamper growth, negatively affect cognitive development and, in some cases, be life-threatening. It is very important that doctors can detect seizures quickly, so they can take preventative measures. In most adults, seizures can be detected using heart rate monitoring and other techniques. In infants, these methods are not as effective. In many cases, parents need to monitor the newborn 24/7, which is very stressful.

Wikke is using computer vision techniques to monitor babies who suffer from epilepsy. This could relieve a lot of pressure on the parents of the child, and possibly make detection more accurate. Hopefully, it gives parents more time for themselves during the day and frees up time for nurses. Such an innovation could mean a lot for parents whose newborn suffers from epilepsy. In this blog post, we will be looking at some of the techniques that can be used to monitor infants and detect seizures effectively.

Human Pose Estimation

One of the symptoms of seizures that is easiest to discern visually is the sudden, uncontrolled movement of arms and legs. For humans, recognizing these movements is natural. For computers, it is a much harder problem, although great progress has been made in this area over the past years.

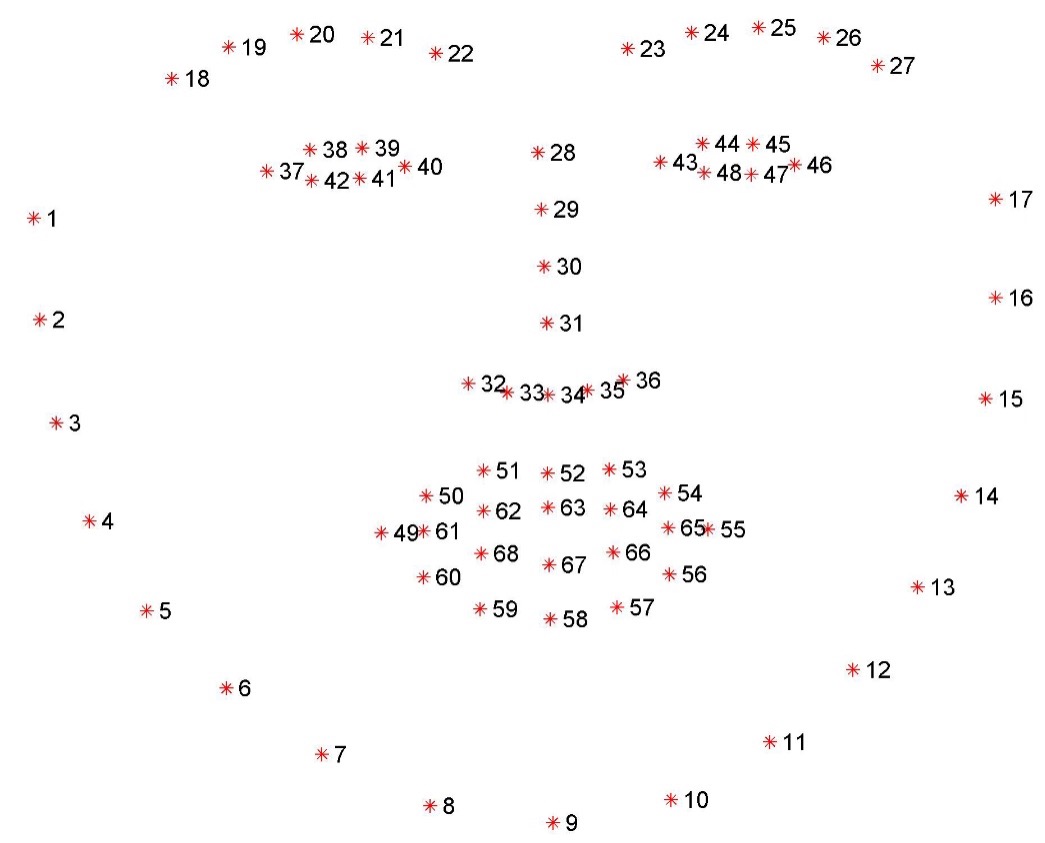

First, we designed a pose estimation model to estimate the position of "key points" in images. For example, the model can predict the location of the wrists, ankles, nose and other key points in a photo. By "feeding" the model a large quantity of pictures, along with the correct location of each key point, it learns to identify those key points without any human help. Instead of giving the model the exact coordinates of each key point, we give it a heatmap that shows the most likely location, to allow for a bit of uncertainty.
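To make the heatmap idea concrete, here is a minimal sketch of how a training target for a single key point could be built. It assumes a Gaussian bump centred on the annotated position, which is a common choice for heatmap-based pose estimation; the function name `keypoint_heatmap`, the 64x64 resolution and the `sigma` value are illustrative assumptions, not details of our model.

```python
import numpy as np


def keypoint_heatmap(height, width, x, y, sigma=2.0):
    """Return a (height, width) heatmap with a Gaussian bump centred on (x, y).

    sigma (in pixels) controls how much positional uncertainty is allowed.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    squared_distance = (xs - x) ** 2 + (ys - y) ** 2
    return np.exp(-squared_distance / (2.0 * sigma ** 2))


# Hypothetical annotation: a wrist at pixel (x=20, y=40) in a 64x64 target.
heatmap = keypoint_heatmap(64, 64, x=20, y=40)
```

The value is 1.0 at the annotated pixel and falls off smoothly around it, so a prediction that is a pixel or two off is penalised less than one that is far away.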

Taken from Newell, A., Yang, K., & Deng, J. (2016) [1]

After a model has been trained, it can be given new images, for which it will produce heatmaps. From these, we can extract the most likely position of each key point. We did some extra work to modify existing pose estimation models so that they work well on babies, since their anatomy is very different from that of adults.
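Extracting positions from predicted heatmaps can be as simple as taking the brightest pixel per key point. The sketch below assumes the model output is an array of shape `(num_keypoints, height, width)`; the function name `decode_keypoints` and the demo values are made up for illustration, and real systems often refine the result to sub-pixel accuracy.

```python
import numpy as np


def decode_keypoints(heatmaps):
    """Return one (x, y, confidence) tuple per key point.

    heatmaps: array of shape (num_keypoints, height, width).
    """
    num_keypoints, height, width = heatmaps.shape
    flat = heatmaps.reshape(num_keypoints, -1)
    best = flat.argmax(axis=1)  # index of the brightest pixel per key point
    ys, xs = np.unravel_index(best, (height, width))
    scores = flat[np.arange(num_keypoints), best]
    return [(int(x), int(y), float(s)) for x, y, s in zip(xs, ys, scores)]


# Two fake heatmaps, each with a single bright pixel.
demo = np.zeros((2, 64, 64))
demo[0, 40, 20] = 0.9  # row 40, column 20, so x=20, y=40
demo[1, 10, 50] = 0.7
keypoints = decode_keypoints(demo)
```

The peak value doubles as a rough confidence score, which is useful for ignoring key points that are occluded or out of frame.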

With the pose estimation model, we can find the positions of key points like wrists and ankles. In turn, this enables the system to track the motion of the infant's arms and legs. By analyzing the observed motion, the system can learn to differentiate between convulsions, which are caused by seizures, and normal random infant body movements. However, not all seizures are characterized by such abnormal movements. For example, hypomotor seizures are characterized by a marked reduction in movement, which makes them more difficult to detect with pose estimation. Still, the same tracking process can pick up this reduction: instead of looking for abnormal motion, the system can try to detect an abnormal lack of movement.
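As a rough illustration of what "analyzing the observed motion" can look like, the sketch below computes the average key point displacement between consecutive frames and compares it against two thresholds. This is a deliberately simple stand-in: the thresholds `low` and `high`, the function names and the fixed rule are assumptions for this example, whereas the approach described above learns the distinction from data.

```python
import numpy as np


def motion_per_frame(track):
    """Mean key point displacement (in pixels) between consecutive frames.

    track: array of shape (num_frames, num_keypoints, 2) holding (x, y) positions.
    """
    displacement = np.diff(track, axis=0)
    return np.linalg.norm(displacement, axis=2).mean(axis=1)


def classify_window(track, low=0.05, high=5.0):
    """Label a window of frames using hypothetical motion thresholds."""
    mean_motion = motion_per_frame(track).mean()
    if mean_motion > high:
        return "excess motion"
    if mean_motion < low:
        return "reduced motion"
    return "normal"


# Synthetic tracks: 30 frames, 4 key points each.
still = np.zeros((30, 4, 2))
jerky = np.zeros((30, 4, 2))
jerky[::2] += 10.0  # every other frame jumps by 10 pixels in x and y
drifting = np.zeros((30, 4, 2)) + np.arange(30)[:, None, None]  # 1 px per frame
```

Here `classify_window(still)` gives "reduced motion", `classify_window(jerky)` gives "excess motion" and `classify_window(drifting)` gives "normal". In practice the units would need to be normalised for camera distance and the size of the infant.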

A second application of the pose estimator is the tracking of several key anatomical sites so that they can be brought into focus for other computer vision techniques. By knowing the location of, for example, the nose, we can crop the larger frame to end up with a frame where only the face of the infant is visible. This will prove to be a valuable capability when we discuss the application of the remaining two vision techniques: facial alignment and motion magnification.
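Cropping around a key point is straightforward once its position is known. The following sketch assumes frames are NumPy arrays of shape `(height, width, channels)` and that the crop is a fixed-size square; `crop_around` and the 128-pixel size are illustrative choices, not part of our pipeline.

```python
import numpy as np


def crop_around(frame, x, y, size):
    """Crop a size x size square centred on (x, y), shifted to stay inside the frame."""
    height, width = frame.shape[:2]
    half = size // 2
    left = min(max(x - half, 0), max(width - size, 0))
    top = min(max(y - half, 0), max(height - size, 0))
    return frame[top:top + size, left:left + size]


# Hypothetical 640x480 frame with the nose detected near the top-right corner.
frame = np.zeros((480, 640, 3), dtype=np.uint8)
face = crop_around(frame, x=620, y=30, size=128)
```

Shifting the window instead of shrinking it keeps the crop size constant, which is convenient when the crop is fed to another model that expects a fixed input size.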

Facial Alignment

Facial alignment is similar to pose estimation, only now applied to facial features. With facial alignment, we can track "facial landmarks", like the corners of the eyes, the outline of the jaw and the tip of the nose. The picture below shows these facial landmarks.

Taken from pyimagesearch [2]

As with pose estimation, we use heatmaps to train a model to identify and track these landmarks. We can use the information from the pose estimation model to find the position of the infant's face in the image. Then, we apply facial alignment to determine the exact position and orientation of the face more accurately.

With this information, we can detect more subtle characteristics of seizures. Some types of seizures can be identified by excessive repetitive blinking and chewing-like movement of the mouth. Often, these occur at a constant frequency, which can be estimated manually after extracting these movements. As with pose estimation, we needed to modify existing facial alignment techniques to improve accuracy on infants.
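If a landmark-based signal such as eye openness is available per frame, the frequency of a repetitive movement could also be estimated automatically. The sketch below uses a Fourier transform to find the dominant frequency; this is our illustrative alternative to manual estimation, and the `eye_openness` signal, the 30 fps frame rate and the function name are all assumptions.

```python
import numpy as np


def dominant_frequency(signal, fps):
    """Return the strongest non-constant frequency in the signal, in Hz."""
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()  # remove the constant offset
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fps)
    return float(freqs[spectrum.argmax()])


# Synthetic example: 10 seconds at 30 fps, eyelid distance oscillating twice per second.
fps = 30
t = np.arange(300) / fps
eye_openness = 1.0 + 0.5 * np.sin(2 * np.pi * 2.0 * t)
blink_hz = dominant_frequency(eye_openness, fps)
```

For this synthetic signal the estimate comes out at roughly 2 Hz. Real landmark tracks are noisier, so a longer window or some smoothing would likely be needed.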

Motion Magnification

Some seizures affect the heart rate and breathing of the child. In more extreme cases, these can cause the blood oxygen saturation to drop to dangerous levels. Being able to monitor heart rate and breathing rate would be very valuable in detecting the most dangerous types of seizures.

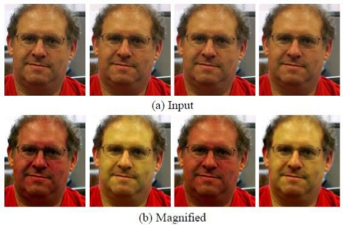

Whilst humans are often really good at detecting the previously mentioned characteristics of seizures, they cannot see someone's heart rate, and can only roughly judge their breathing rate. Perhaps surprisingly, this information can be extracted with computer vision techniques. The flow of blood caused by the heart pumping leads to a tiny change in the color of the face. This effect is not visible to humans. By digitally magnifying the color changes in a video, computers can make them visible. Similarly, the subtle movement of the chest due to breathing can be magnified to calculate the breathing rate.
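The core of color magnification is a temporal filter: keep only the variations that fall in the frequency band of interest and add them back, amplified. The sketch below shows just that step on a tiny synthetic video. It is a simplification of Eulerian video magnification [3], which also decomposes each frame spatially before filtering; the function name, the band limits and the gain are assumptions for this example.

```python
import numpy as np


def magnify_temporal(video, fps, low_hz, high_hz, gain):
    """Amplify per-pixel variations over time within [low_hz, high_hz].

    video: float array with time on the first axis, e.g. (num_frames, height, width).
    """
    spectrum = np.fft.rfft(video, axis=0)
    freqs = np.fft.rfftfreq(video.shape[0], d=1.0 / fps)
    keep = (freqs >= low_hz) & (freqs <= high_hz)
    spectrum[~keep] = 0  # drop everything outside the band, including the mean
    band = np.fft.irfft(spectrum, n=video.shape[0], axis=0)
    return video + gain * band


# Synthetic 4x4 video: constant brightness plus a tiny 2 Hz (120 bpm) flicker.
fps = 30
t = np.arange(300) / fps
video = 0.5 + 0.001 * np.sin(2 * np.pi * 2.0 * t)[:, None, None] * np.ones((1, 4, 4))
magnified = magnify_temporal(video, fps, low_hz=1.5, high_hz=3.0, gain=50.0)
```

With a gain of 50, the flicker in `magnified` is 51 times larger than in the input, while the average brightness is unchanged.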

The image below demonstrates what color magnification looks like to visualize blood flow.

Taken from Wu et al (2012) [3]

By measuring the time between successive "peaks" of redness, we can extract the heart rate. This also works for babies, and provides an important data point for detecting seizures.
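Turning peak intervals into a rate takes only a few lines. The sketch below assumes a clean per-frame redness signal (for example the mean red value of the face region) and uses a very simple local-maximum rule; `rate_from_peaks` is a hypothetical helper, and a real signal would need filtering before peaks can be trusted.

```python
import numpy as np


def rate_from_peaks(signal, fps):
    """Estimate events per minute from the mean time between local maxima."""
    signal = np.asarray(signal, dtype=float)
    centred = signal - signal.mean()
    middle = centred[1:-1]
    # A peak rises above the previous sample, does not fall below the next,
    # and lies above the mean of the signal.
    is_peak = (middle > centred[:-2]) & (middle >= centred[2:]) & (middle > 0)
    peak_frames = np.flatnonzero(is_peak) + 1
    if len(peak_frames) < 2:
        return None  # not enough peaks to measure an interval
    mean_interval_seconds = np.diff(peak_frames).mean() / fps
    return float(60.0 / mean_interval_seconds)


# Synthetic redness signal: 10 seconds at 30 fps, pulsing twice per second.
fps = 30
t = np.arange(300) / fps
redness = np.sin(2 * np.pi * 2.0 * t)
bpm = rate_from_peaks(redness, fps)
```

For this synthetic signal the peaks are 15 frames (0.5 seconds) apart, which corresponds to 120 beats per minute.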

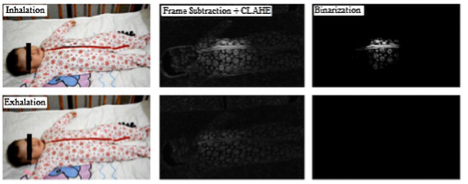

Motion magnification is a little different. It is used to magnify tiny motions in the image so they become visible. The image below shows motion magnification in action.

Taken from Al-Naji, A., & Chahl, J. (2016) [4]

As before, we measure the time between successive "peaks" to calculate the breathing rate. In this case, a peak is the moment of greatest expansion of the chest. Any significant deviation from the expected breathing rate could indicate that the child is suffering from a seizure. Both of these techniques are valuable, but they are limited by the quality of the recording, lighting changes and other hard-to-control circumstances.

Conclusion

There's been a great deal of research over the past years in various deep learning techniques. Building on these, it might finally be possible to let computers do the work of humans. In the case of epilepsy in infants, such a technology could provide great relief to parents and free up time for nurses. In this blog post, we discussed some of the underlying techniques and showed how they can be applied to the detection of epilepsy.

Oddity is continuing research in this area to see if we can combine all this information to accurately detect seizures, like a human would. If successful, we can hopefully get this technology to hospitals and homes, where it could have a lot of positive impact. Before it can be applied in practice, however, additional research into usability, safety and accuracy is required. For now, the project is a proof of concept.

If you are interested in our work, or feel you can contribute in any way, we would love to hear from you. Get in touch!

References

[1] Newell, A., Yang, K., & Deng, J. (2016). Stacked hourglass networks for human pose estimation. European Conference on Computer Vision (pp. 483-499). Springer, Cham.

[2] pyimagesearch (pyimagesearch.com).

[3] Wu, H. Y., Rubinstein, M., Shih, E., Guttag, J., Durand, F., & Freeman, W. (2012). Eulerian video magnification for revealing subtle changes in the world. ACM Transactions on Graphics (TOG), 31(4), 1-8.

[4] Al-Naji, A., & Chahl, J. (2016). Remote respiratory monitoring system based on developing motion magnification technique. Biomedical Signal Processing and Control, 29, 1-10.